Cybersecurity: fashion or strategy

April 11, 2024

Ansible with bastion host

This is the second part of my IaC overview, based on a personal experiment: building a cyber range using the IaC paradigm. Here are the first and third parts.

From a pure design perspective, the client-to-site VPN approach is still the best. From an automation perspective, however, I had to redesign it around a bastion host. I don’t much like the idea, but the pros outweigh the cons.

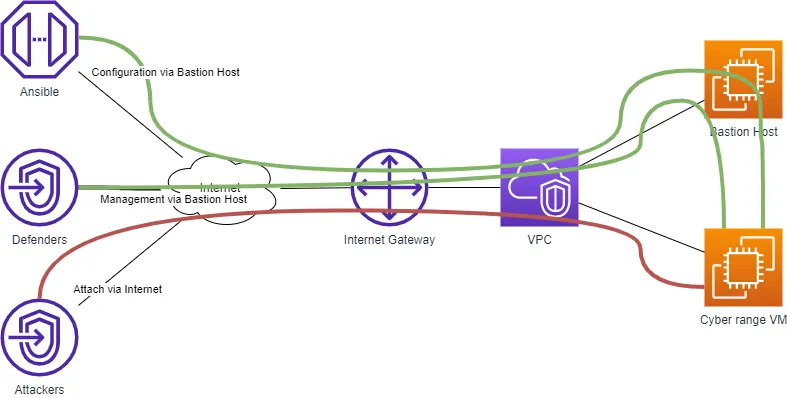

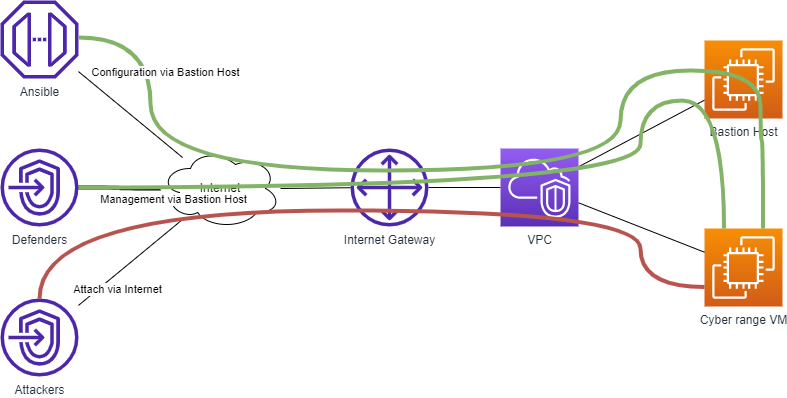

Scenario

Compared to the scenario with the client-to-site VPN concentrator, this one seems simpler: internal VMs are reachable via the Linux bastion host. In practice, the bastion host proxies SSH connections. The bastion host does not require any configuration at all.

Because my scenario requires attendees to reach the internal VMs, I copy to the bastion host the SSH private key needed to log in to them. In the future, I think it would be better if the bastion host also served as an OpenVPN concentrator.

Bastion host and AWS EC2 dynamic inventory

At this point Ansible should:

- configure the bastion host using the public IP address;

- configure the internal hosts using the private IP address via the bastion host’s public IP address.

Because of the Spacelift design, I had to configure everything using a single Ansible playbook. It means that the AWS EC2 Ansible inventory must:

- return the public IP address for the bastion host;

- return the private IP addresses for the internal VMs.

Moreover, Ansible should configure the SSH proxy just before logging in to each internal host.

After several attempts, I found a working recipe. Let’s start with the Ansible inventory:

```yaml
plugin: aws_ec2
regions:
  - eu-central-1
filters:
  instance-state-name: running
keyed_groups:
  - key: tags
    prefix: tag
hostnames:
  - tag:Name
compose:
  ansible_host: public_ip_address if tags.Name == "bastion" else private_ip_address
```

Remember I wrote that IaC is 80% plan and standardization? I assume that in every scenario I will use “bastion” as the hostname for the bastion host, and I tag the hostname in the AWS EC2 configuration. This is one of my “standards” (assumptions).

In the AWS EC2 inventory configuration above, I return the public IP address only if the Name tag is equal to bastion. The inventory returns the private IP address for every other VM.
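A quick way to check that the compose rule behaves as described is a throwaway play that only prints the address Ansible would connect to. This is a minimal sketch, not part of my real playbook: the file name `check-inventory.yaml` is hypothetical, and it assumes the inventory above is passed with `-i`. The debug module runs on the Ansible host, so no SSH connection is opened.

```yaml
# check-inventory.yaml (hypothetical helper, not part of the real playbook)
- hosts: all
  gather_facts: no
  tasks:
    - name: Show which address Ansible will use for each host
      ansible.builtin.debug:
        # Expected: the public IP for "bastion", a private IP for everything else
        msg: "{{ inventory_hostname }} -> {{ ansible_host }}"
```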

My Ansible playbook starts by configuring the bastion host:

```yaml
- hosts: tag_Name_bastion
  gather_facts: no
  remote_user: ubuntu
  roles:
    - role: linux-bastion
      tags: always
```

In the role, I find the available SSH key and upload it to the bastion host for attendees. Remember I wrote that I want a “soft” lock-in? This is where I make the playbook compatible with both the Spacelift environment and my own. I also use the tag “always” because the role sets ansible_ssh_private_key_file, which the later plays depend on (see below).
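My role is not published, so what follows is only a sketch of how such a role could look. The file layout, the `candidate_keys` variable, both key paths and the destination file name are assumptions made for illustration. It picks the first key that exists on the Ansible host, stores it as a host fact on the bastion, and then uploads it.

```yaml
# roles/linux-bastion/tasks/main.yml (hypothetical layout and paths)
- name: Pick the first SSH private key that exists on the Ansible host
  ansible.builtin.set_fact:
    ansible_ssh_private_key_file: "{{ lookup('ansible.builtin.first_found', candidate_keys) }}"
  vars:
    candidate_keys:
      # Assumed location when running inside Spacelift
      - /mnt/workspace/id_rsa
      # Assumed location when running from my own workstation
      - "{{ lookup('ansible.builtin.env', 'HOME') }}/.ssh/id_rsa"

- name: Upload the key so attendees can reach internal VMs from the bastion
  ansible.builtin.copy:
    src: "{{ ansible_ssh_private_key_file }}"
    # Matches remote_user: ubuntu in the play; the file name is an assumption
    dest: /home/ubuntu/.ssh/cyberrange_key
    mode: "0600"
```

Because set_fact needs no connection, the key is already selected by the time the copy task opens the first SSH session to the bastion.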

Configuring internal hosts via bastion

At this point I can configure the internal hosts. Another assumption I made is that the tags contain every attribute I need in Ansible to group, query and configure hosts. In practice I’m using the following tags:

- Os:ubuntu: for Ubuntu VMs;

- Database:mariadb: for MariaDB VMs;

- Webapp:wordpress: for VMs with WordPress.
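To make the link between tags and inventory groups explicit, here is how the tags of one hypothetical internal VM map to groups under the keyed_groups rule from the inventory (key: tags, prefix: tag). The VM name `db01` and the tag values are made up for the example.

```yaml
# Tags on a hypothetical internal VM (values are illustrative)
tags:
  Name: db01
  Os: ubuntu
  Database: mariadb
  User: ubuntu

# Groups generated by keyed_groups with "key: tags" and "prefix: tag":
#   tag_Name_db01
#   tag_Os_ubuntu
#   tag_Database_mariadb
#   tag_User_ubuntu
```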

My Ansible playbook runs multiple plays:

```yaml
- hosts: tag_Name_bastion
  gather_facts: no
  remote_user: ubuntu
  roles:
    - role: linux-bastion
      tags: always

- hosts: tag_Os_ubuntu:!tag_Name_bastion
  gather_facts: yes
  become: yes
  vars_files:
    - default.yaml
  roles:
    - role: set-environment
      tags: always
    # [...]

- hosts: tag_Os_ubuntu:&tag_Database_mariadb
  gather_facts: yes
  become: yes
  vars_files:
    - default.yaml
  roles:
    - role: set-environment
      tags: always
    - role: linux-mariadb
      tags: mariadb
    # [...]

- hosts: tag_Os_ubuntu:&tag_Webapp_wordpress
  # [...]
```

Ansible facts are host specific: if I set ansible_ssh_private_key_file on the bastion host, it is still undefined for the other hosts.

How can I configure the SSH proxy for all internal hosts, excluding the bastion host?

The magic happens in the default.yaml file, using Ansible facts and magic variables. The default.yaml file is included in every play targeting internal hosts, and it configures the SSH proxy using information from the inventory:

```yaml
ansible_user: "{{ tags.User }}"
ansible_ssh_private_key_file: '{{ hostvars["bastion"]["ansible_ssh_private_key_file"] }}'
ansible_ssh_common_args: >-
  -o ProxyCommand="ssh
  -o IdentityFile={{ hostvars["bastion"]["ansible_ssh_private_key_file"] }}
  -o StrictHostKeyChecking=no
  -o UserKnownHostsFile=/dev/null
  -W %h:%p
  -q {{ hostvars["bastion"]["tags"]["User"] }}@{{ hostvars["bastion"]["public_ip_address"] }}"
# [...]
```

Remember I wrote that IaC is 80% plan and standardization? I’m still assuming that the bastion host is called bastion, and the tag User contains the remote user for each VM. So, for each VM:

- ansible_user: contains the remote username configured in the tag User;

- ansible_ssh_private_key_file: contains the local SSH private key (stored on the Ansible VM); the path is taken from the bastion host entry in the inventory;

- ansible_ssh_common_args: contains the SSH proxy command used by Ansible, built from the bastion public IP address taken from the inventory.

Both SSH keys must be local to the Ansible host.
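To see what the templates in default.yaml turn into, here are the three variables rendered for one internal VM. All values are placeholders I chose for the example: the key path `/home/me/.ssh/id_rsa`, the documentation address 203.0.113.10 for the bastion, and `ubuntu` as the User tag on both hosts. The folded scalar (`>-`) joins the lines with spaces, so the proxy command ends up on a single line.

```yaml
# Rendered values for a hypothetical internal VM (all values are placeholders)
ansible_user: ubuntu
ansible_ssh_private_key_file: /home/me/.ssh/id_rsa
ansible_ssh_common_args: >-
  -o ProxyCommand="ssh -o IdentityFile=/home/me/.ssh/id_rsa
  -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null
  -W %h:%p -q ubuntu@203.0.113.10"
```

SSH replaces %h and %p with the internal VM's address and port, so the bastion only forwards the TCP stream and never sees the private key.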

At this point, I have a single Ansible playbook that configures my Cyber range scenario using a bastion host.

Conclusions

I find that bastion hosts are commonly used. I don’t like them very much because, from a security perspective, the bastion host is one more host with superpowers. But from an automation perspective, this was the only approach that actually worked for me.

References

- My scripts are not ready to be published, but if you need details, drop me an email.