Cybersecurity: fashion or strategy

April 11, 2024

The value of a Risk Based VA

In this article, I explain why we run VAs, why the traditional approach is dangerous, and how we should manage the risk bound to vulnerabilities.

I also discuss a few false myths about VA.

I hope this article helps you approach the vulnerability management process in a way that reduces the unmanaged risk bound to vulnerabilities.

The purpose of a VA

Almost every company should request a yearly VA. To be honest, they often request a VAPT (whatever that means). When I try to understand the reason behind this legitimate request, I realize that it is not so clear: we are doing a VAPT every year because everybody does.

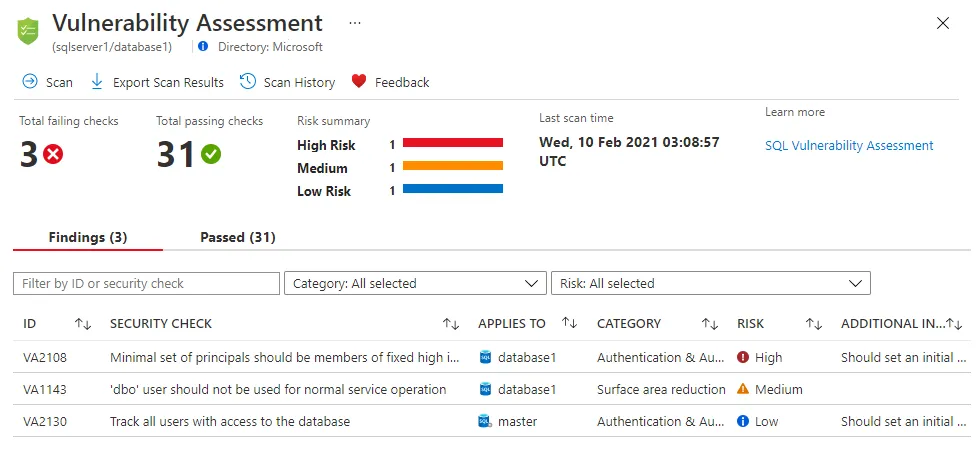

So, let’s start from the beginning: a Vulnerability Assessment (VA) is a measurement. To be specific, a VA counts the known vulnerabilities present in systems and applications. VA scanners are signature-based: if they find application X, they estimate vulnerability Y.

Yes, VA scanners estimate vulnerabilities; they do not verify them. Most modern VA scanners include DAST (Dynamic Application Security Testing): in other words, they try to break web applications and report estimated web vulnerabilities.
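To make the idea of a signature-based estimate concrete, here is a minimal sketch. The product names, the vulnerability IDs, and the banner format are all invented for illustration; real scanners use far richer detection logic. The point is only that the lookup reports what is known about a detected version and never tests anything.

```python
import re

# Hypothetical signature database: (product, version) -> placeholder vulnerability IDs.
SIGNATURES = {
    ("examplehttpd", "2.4.9"): ["VULN-0001", "VULN-0002"],
    ("exampleftpd", "1.3.5"): ["VULN-0003"],
}

# Assumed banner shape: "Product/1.2.3" somewhere in the string.
BANNER_PATTERN = re.compile(r"(?P<product>[A-Za-z]+)/(?P<version>[\d.]+)")


def estimate_vulnerabilities(banner: str) -> list:
    """Map a service banner to known vulnerability IDs without testing any of them."""
    match = BANNER_PATTERN.search(banner)
    if match is None:
        return []  # unknown or custom application: no signature, nothing reported
    key = (match.group("product").lower(), match.group("version"))
    return SIGNATURES.get(key, [])


print(estimate_vulnerabilities("Server: ExampleHTTPd/2.4.9"))
print(estimate_vulnerabilities("Server: InHouseApp"))
```

The second call returns an empty list, which is how a custom application ends up looking "clean" in a VA report.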

Finally, VA scanners do not cover custom applications, and they often do not cover popular web frameworks either (WordPress, Drupal…). The reason is simple: VA scanners rarely include signatures for a specific web application framework. Specialized scanners exist for that purpose: WordPress users, for example, should use a dedicated scanner such as WPScan.

The outcome of a VA is a list of detected vulnerabilities:

- an estimated risk value is usually included for each vulnerability (VAs are not context-aware);

- custom systems and applications are not evaluated (VA scanners do not include specific signatures);

- risk mitigations are not evaluated (VA is not context-aware).

Legacy approach

In a legacy VA approach, the outcome is the input of a patch management process: we know what is vulnerable, and we need to patch.

We have some issues with this approach:

- Blind patching: patching everything is not sustainable. In practice, IT teams start with the easiest patches and proceed on a best-effort basis. The result is that mission-critical systems (SAP, Oracle, CRM…) are rarely patched.

- Context-less: VA outcomes do not consider the in-place security controls. Systems and applications in a high-security zone are patched with the same urgency as the exposed services, just because they are affected by the same critical vulnerability.

- Time-to-patch: assuming a yearly VA, in the worst-case scenario a critical patch might be considered only one year after its release.

Risk-based VA

In a risk-based approach, we add the context. The outcome of a VA must be enriched with:

- Inherent risk: the risk bound to each specific vulnerability, excluding in-place mitigations.

- Mitigation factor: how much the in-place security controls (firewalls, IPSs, WAFs…) mitigate the risk.

- Residual risk: the risk bound to each specific vulnerability, considering all mitigation factors.

We can calculate the inherent risk with: impact x likelihood.

All values are application-dependent, but we can simplify things and assume that:

- Impact depends on the vulnerable networks because once the network is compromised, all included systems can be compromised too.

- Likelihood depends on the vulnerability (available exploits, difficulty of exploitation…).

- Mitigation factor depends on the security zone because we can assume security controls are deployed equally to secure a specific set of networks.

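- Residual risk is inherent risk x mitigation factor, so the mitigation factor works as a multiplier: the stronger the in-place controls, the lower the factor.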
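The two formulas can be sketched in a few lines of Python. The 1 to 5 scales and the convention that the mitigation factor is a multiplier between 0 and 1 (1.0 meaning no effective controls) are my assumptions for this example; use whatever scales your organization already has.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One vulnerability in one security zone, with illustrative scores."""
    name: str
    impact: int               # assumed scale: 1 (low) to 5 (high), tied to the network
    likelihood: int           # assumed scale: 1 (hard to exploit) to 5 (trivial)
    mitigation_factor: float  # assumed multiplier: 1.0 = no controls, lower = stronger controls

    @property
    def inherent_risk(self) -> int:
        # inherent risk = impact x likelihood
        return self.impact * self.likelihood

    @property
    def residual_risk(self) -> float:
        # residual risk = inherent risk x mitigation factor
        return self.inherent_risk * self.mitigation_factor


exposed = Finding("RCE on an exposed service", impact=4, likelihood=5, mitigation_factor=1.0)
internal = Finding("same RCE in a high-security zone", impact=4, likelihood=5, mitigation_factor=0.25)

for finding in (exposed, internal):
    print(f"{finding.name}: inherent={finding.inherent_risk}, residual={finding.residual_risk}")
```

Both findings share the same inherent risk, but the residual risk differs by zone, which is exactly the context a legacy VA report lacks.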

We need to define another value, the risk appetite. The risk appetite is the risk value that the organization is willing to accept.

Using a Risk-based approach, the VA report is enriched (as we discussed before) and ordered by residual risk:

- Vulnerabilities with a residual risk lower than or equal to the risk appetite are discarded.

- Vulnerabilities with a medium risk must be managed (for example) within 10 working days.

- Vulnerabilities with a critical risk must be managed (for example) within 3 working days.

A risk-based approach is deterministic and doesn’t leave the risk unmanaged.
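The triage rules above are simple enough to write down as code. In this sketch the numeric thresholds (`RISK_APPETITE`, `CRITICAL_THRESHOLD`) and the sample findings are hypothetical; only the 10 and 3 working-day deadlines come from the examples in the list.

```python
from typing import Optional, Tuple

RISK_APPETITE = 4.0        # hypothetical: residual risk the organization accepts
CRITICAL_THRESHOLD = 15.0  # hypothetical: residual risk at or above this is critical


def triage(residual_risk: float) -> Tuple[str, Optional[int]]:
    """Return (decision, deadline in working days) for one residual risk value."""
    if residual_risk <= RISK_APPETITE:
        return ("accept", None)
    if residual_risk >= CRITICAL_THRESHOLD:
        return ("critical", 3)
    return ("medium", 10)


# Hypothetical findings: name -> residual risk.
findings = {"legacy FTP banner": 2.0, "unpatched CMS plugin": 9.0, "exposed RCE": 20.0}

# Order the report by residual risk, highest first, then attach the decision.
report = sorted(findings.items(), key=lambda item: item[1], reverse=True)
for name, risk in report:
    decision, days = triage(risk)
    deadline = f"within {days} working days" if days is not None else "no action"
    print(f"{risk:5.1f}  {name}: {decision}, {deadline}")
```

Because the same input always produces the same decision, every vulnerability ends up either accepted or assigned a deadline.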

False myth: provider rotation

The first false myth I want to discuss is provider rotation. Many organizations I know change the provider (the company that executes the VA) every year because they believe that a different partner will detect different vulnerabilities. This is wrong, because:

- VA providers use commercial software from Tenable, Rapid7… I’m not saying that all tools are equal; I’m saying that changing the partner doesn’t mean that the tool is different.

- Even if I change the VA scanner every year, it’s unacceptable to discover vulnerabilities after one more year just because I changed the tool (unmanaged risk).

- Changing the tool means a different VA report format: to evaluate the process I need to correlate KPIs across different tools (not so easy).

False myth: VAPT

Organizations are focusing on VAPT only, often referring to legacy VA. As we discussed before, VA scanners are not designed to detect all vulnerabilities that can affect organizations. VA scanners are a valuable way to scan large infrastructures quickly, but they are not designed to go deep.

We know that DAST can help, but it is still bound to a specific context.

PT should be considered after the previous steps (VA and DAST) to evaluate critical custom services (HR portals, CRM…).

Organizations that limit themselves to VA are not managing the risk bound to custom applications.

False myth: VA as a patch management tool

We mentioned it before: with a yearly VA, we can have unmanaged vulnerabilities for one year (worst-case scenario). We can reduce the interval, but unless we are executing a complete VA every week, we need a different approach.

A working vulnerability management process requires discovering, prioritizing, and remediating vulnerabilities.

VA can be used in the discovery phase, but the right input comes from vendor security feeds (Microsoft, Cisco, Red Hat…). We should:

- obtain new vulnerabilities on a daily basis;

- evaluate the associated risk;

- define how we can mitigate (patching, additional security controls…).
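As a rough sketch of the daily step, the code below matches a day's advisories against a software inventory. Everything here is hypothetical: the `Advisory` structure, the product names, and the advisory ID are placeholders, and real vendor feeds come in their own formats that you would need to parse first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    """Simplified vendor advisory; real feeds carry much more detail."""
    advisory_id: str     # placeholder identifier, not a real advisory
    product: str
    fixed_in: tuple      # first version that contains the fix, e.g. (2, 4, 10)
    vendor_score: float  # risk score as published by the vendor


def parse_version(text: str) -> tuple:
    """Turn '2.4.10' into (2, 4, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def affected_assets(inventory: dict, advisories: list) -> list:
    """Return (host, advisory) pairs where the installed version predates the fix."""
    hits = []
    for host, software in inventory.items():
        for advisory in advisories:
            installed = software.get(advisory.product)
            if installed is not None and parse_version(installed) < advisory.fixed_in:
                hits.append((host, advisory))
    return hits


# Hypothetical inventory: host -> {product: installed version}
inventory = {
    "web01": {"examplehttpd": "2.4.9"},
    "web02": {"examplehttpd": "2.4.12"},
    "db01": {"exampledb": "11.2"},
}
todays_advisories = [Advisory("ADV-0001", "examplehttpd", parse_version("2.4.10"), 9.8)]

for host, advisory in affected_assets(inventory, todays_advisories):
    print(f"{host} is affected by {advisory.advisory_id} (vendor score {advisory.vendor_score})")
```

Only `web01` is reported, on the day the advisory is published and without running any scan. The sketch is only as good as the inventory behind it.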

With this approach, VAs are used to verify the vulnerability management process, not to start it. Of course, this approach depends on other processes like asset and software management, and change management.

DIY and automate

I’m an automation addict, and I automate any boring process I can. In my view, large organizations should be able to execute VAs by themselves. And they should automate them from the scan phase to the report phase, including most of the risk assessment considerations.

Let’s try to design the process:

- Asset and software inventories contain what is running within the organization. Inventories also contain the impact score (the business asset value).

- We can estimate a mitigation factor for each security zone, calculated by taking into account the in-place security controls (firewalls, IPSs, WAFs…). We know that this is not accurate, but it can work initially.

- Based on asset and software inventories, we can get relevant vulnerabilities from vendor feeds.

- Based on the estimated risk score (usually provided by vendors), we can calculate the inherent risk and report (or alert on) medium and critical vulnerabilities.

- An analyst should analyze the report and define the next steps.
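Here is one way the process could be wired together, as a sketch rather than a finished tool. The assets, zones, multipliers, advisory IDs, and the risk appetite are invented values, and using the vendor score directly as the likelihood is a simplification I chose for the example.

```python
# Hypothetical inventory: asset -> business impact (1-5) and security zone.
ASSETS = {
    "web01": {"impact": 3, "zone": "dmz"},
    "erp01": {"impact": 5, "zone": "internal"},
    "hr-portal": {"impact": 4, "zone": "restricted"},
}

# Hypothetical per-zone multipliers: 1.0 = no effective controls.
ZONE_MITIGATION = {"dmz": 1.0, "internal": 0.6, "restricted": 0.3}

# Findings as (asset, advisory id, vendor score on a 0-10 scale), for example
# the result of matching vendor feeds against the software inventory.
FINDINGS = [
    ("web01", "ADV-0001", 9.8),
    ("erp01", "ADV-0002", 7.5),
    ("hr-portal", "ADV-0003", 5.0),
]

RISK_APPETITE = 10.0  # hypothetical threshold on the residual risk


def build_report(findings, assets, zone_mitigation, appetite):
    """Enrich findings with inherent and residual risk, drop accepted ones, sort."""
    rows = []
    for asset, advisory_id, vendor_score in findings:
        info = assets[asset]
        inherent = info["impact"] * vendor_score  # vendor score used as likelihood
        residual = inherent * zone_mitigation[info["zone"]]
        if residual > appetite:
            rows.append({"asset": asset, "advisory": advisory_id,
                         "inherent": inherent, "residual": residual})
    return sorted(rows, key=lambda row: row["residual"], reverse=True)


for row in build_report(FINDINGS, ASSETS, ZONE_MITIGATION, RISK_APPETITE):
    print(f'{row["asset"]:10} {row["advisory"]}  '
          f'inherent={row["inherent"]:.1f} residual={row["residual"]:.1f}')
```

The output is the short, ordered list the analyst starts from. Note that `erp01` has the highest inherent risk but ranks below `web01` once the zone is taken into account.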

Conclusions

We learned how to move from the legacy VAPT approach to a risk-based vulnerability management process. We know that it’s not so easy, but we know that the legacy approach is leaving the risk unmanaged. VA scanners are valuable tools if used in the right way. They are dangerous if we are using them as the single input for the vulnerability management process.