
April 29, 2024

Speculating about UNetLabv3

My previous post about the UNetLab story became unexpectedly popular. UNetLab has been one of my most important and most public projects, and I’m honored that so many people around the world used my homemade project.

Although I left the project in 2015, UNetLab is still on my mind, and sometimes I *speculate* about the next UNetLab version. So that’s what this post is about: let’s speculate about what we (I’m including you) would like to see in a hypothetical next-generation virtual lab platform.

Additional note: in recent years I moved from networking to automation and finally to cybersecurity, so my needs have changed, as you can read in the paragraphs below. But I don’t want to focus only on my needs: the “U” in UNetLab stands for “Unified”.

UNetLabv1 limits

Let’s recap the UNetLab limits:

- per host pod limit: currently each host can run up to 256 independent pods, because of the console port limit;

- per lab node limit: currently each pod/lab can run up to 128 - 1 nodes, because of the console port limit;

- per user pod limit: currently each user can run up to one pod/lab at a time, because of the console port limit;

- one host only: currently there is no way to make a distributed installation of UNetLab (OVS could be used, but many frame types are filtered by default);

- config management: getting and putting the startup-config is done through Expect scripts, which are slow, unpredictable, and cannot cover all node types;

- Dynamips serial interfaces are not supported;

- no topology change is allowed while the lab is running, by design.

The console port limit exists because each node console has a fixed TCP port, calculated as follows: ts_port = 32768 + 128 * tenant_id + device_id. Moreover, no more than 512 IOL nodes can run inside the same lab because device_id must be unique for each tenant.
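The per-host and per-lab limits above follow directly from this formula. A minimal Python sketch, assuming tenant_id ranges from 0 to 255 and device_id from 1 to 127 (my reading of the limits listed above; the function and constant names are hypothetical):

```python
# Hypothetical sketch of the UNetLabv1 console port scheme:
# ts_port = 32768 + 128 * tenant_id + device_id
BASE_PORT = 32768        # consoles live in the upper half of the TCP port space
PORTS_PER_TENANT = 128   # one block of console ports per tenant (pod)
MAX_PORT = 65535         # highest valid TCP port

# 32768 usable ports / 128 ports per tenant = 256 tenants (pods) per host.
MAX_TENANTS = (MAX_PORT + 1 - BASE_PORT) // PORTS_PER_TENANT


def console_port(tenant_id: int, device_id: int) -> int:
    """Return the fixed console port of a node (hypothetical helper)."""
    if not 0 <= tenant_id < MAX_TENANTS:
        raise ValueError(f"tenant_id must be in 0..{MAX_TENANTS - 1}")
    # device_id is assumed to start at 1, which leaves 128 - 1 = 127 nodes per lab.
    if not 1 <= device_id < PORTS_PER_TENANT:
        raise ValueError(f"device_id must be in 1..{PORTS_PER_TENANT - 1}")
    return BASE_PORT + PORTS_PER_TENANT * tenant_id + device_id


if __name__ == "__main__":
    print(MAX_TENANTS)             # 256
    print(console_port(0, 1))      # 32769
    print(console_port(255, 127))  # 65535, the last TCP port
```

Because every tenant owns a fixed block of ports, there is no room left in the 16-bit port space: more pods, bigger labs or more labs per user would all need a different console scheme.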

UNetLabv3 wishlist

So, in my mind and because of my job, a hypothetical UNetLabv3 must support:

- Teaching:

  - multi-tenancy: each teacher should be able to manage an independent environment;

  - classes: within each tenant, users are divided into classes (class-level visibility);

  - users should be able to start and (possibly) modify their own labs (user-level visibility);

  - shared access: multiple users could access the same running lab;

  - design mode: changing a lab should not affect running copies, and any lab could be converted into a “template”;

  - solo mode: non-teachers should still be able to write and run labs without worrying about classes, students… (most UNetLab users use it for themselves);

  - content writing: labs should include additional content (slides, videos… Udemy style);

  - auto-evaluation: teachers could include scripts to automatically evaluate users.

- Labbing:

  - live changes (jitter, delay, interface up/down) should be applied immediately to the running lab;

  - serial interfaces (also between IOU and Dynamips).

- Marketplace: a place where users and teachers can share labs and learning paths (Udemy style).

- Automation: all relevant nodes within a lab should be reachable by automation software by default.

- Packet capture: users should be able to capture packets from any specific interface (the old UNetLab doesn’t have this capability).

- Scale-out: labs could run across multiple computing nodes.

- Support for:

  - images: IOL, Dynamips, Docker, VM;

  - hypervisors: Linux QEMU/KVM and VMware vSphere.

- Open source and community-driven.
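The teaching requirements boil down to a nested visibility model (users inside classes inside tenants). A minimal Python sketch of how such a check could work; the `Scope` levels, the data classes and the rule that teachers see everything in their tenant are my assumptions, not an existing UNetLab API:

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Scope(Enum):
    USER = "user"      # only the owner sees the lab
    CLASS = "class"    # everyone in the owner's class sees it
    TENANT = "tenant"  # everyone in the owner's tenant sees it


@dataclass(frozen=True)
class User:
    name: str
    tenant: str
    class_name: Optional[str] = None  # None for teachers and solo users
    is_teacher: bool = False


@dataclass(frozen=True)
class Lab:
    name: str
    owner: User
    scope: Scope = Scope.USER


def can_see(user: User, lab: Lab) -> bool:
    """Return True if `user` may see `lab` (hypothetical visibility rules)."""
    if user.tenant != lab.owner.tenant:
        return False  # tenants are fully isolated from each other
    if user.is_teacher or user == lab.owner:
        return True   # teachers manage their whole tenant; owners see their own labs
    if lab.scope is Scope.TENANT:
        return True
    if lab.scope is Scope.CLASS:
        return user.class_name is not None and user.class_name == lab.owner.class_name
    return False      # Scope.USER: owner only


if __name__ == "__main__":
    alice = User("alice", "acme", is_teacher=True)
    bob = User("bob", "acme", "ccna-1")
    carol = User("carol", "acme", "ccna-1")
    lab = Lab("ospf-basics", bob, Scope.CLASS)
    print(can_see(alice, lab), can_see(carol, lab))  # True True
```

In this model, solo mode is simply a tenant with a single user who owns every lab, so no special case is needed.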

Implementation

So, how would I approach the above prerequisites? Let’s try to speculate:

- teaching - multi-tenancy: easy, I used the same approach in UNetLabv1;

- teaching - classes: easy, it’s just a sort of “nested” multi-tenancy (users - classes - tenants);

- teaching - shared access and design mode: easy, just need to clone the lab and assign it to the user;

- teaching - design mode: easy, just copy all running nodes into a template lab (same tenant scope);

- teaching - solo mode: easy, it’s the design mode;

- labbing - live changes: not all images support changing the interface status, and the jitter/delay features must be implemented;

- labbing - serial interfaces: hard, a packet manager must translate communication between IOL and Dynamips (not sure it makes sense);

- marketplace: hard, it requires skills (mind that I’m not a developer) and infrastructure;

- automation: medium, if all images come with a pre-configured management interface, the back-end should assign and track MAC/IP addresses (maybe a DNS service is needed too);

- packet capture: easy, the logic already supports that;

- scale-out: hard, see below;

- support - images: easy, UNetLabv1 already supports them, but I’m not sure Dynamips still makes sense;

- support - hypervisor: medium, it requires redesigning the provisioning framework (in my mind Ansible could be a good tool).

- community-driven: hard, with UNetLabv1 I failed to build a community and attract collaborators.
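For the automation item, a back-end that assigns and tracks management addresses could look roughly like the following Python sketch. It assumes one IPv4 management subnet per lab, locally administered MAC addresses derived from hypothetical lab and node IDs, the first host address reserved for a lab gateway, and a made-up naming scheme (`node.lab.tenant.lab.example`) for the FQDNs:

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MgmtLease:
    node: str
    mac: str
    ip: ipaddress.IPv4Address
    fqdn: str


def mgmt_mac(lab_id: int, node_id: int) -> str:
    """Derive a locally administered unicast MAC from hypothetical lab/node IDs."""
    if not (0 <= lab_id < 1 << 16 and 0 <= node_id < 1 << 16):
        raise ValueError("lab_id and node_id must fit in 16 bits")
    # First octet 0x02: "locally administered" bit set, multicast bit clear.
    octets = [0x02, 0x00, (lab_id >> 8) & 0xFF, lab_id & 0xFF,
              (node_id >> 8) & 0xFF, node_id & 0xFF]
    return ":".join(f"{o:02x}" for o in octets)


def allocate(lab_id: int, lab: str, tenant: str, subnet: str,
             nodes: List[str], domain: str = "lab.example") -> List[MgmtLease]:
    """Assign a MAC, an IP and an FQDN to each node's management interface."""
    hosts = iter(ipaddress.IPv4Network(subnet).hosts())
    next(hosts, None)  # reserve the first host address for the lab gateway
    leases = []
    for node_id, node in enumerate(nodes, start=1):
        ip = next(hosts, None)
        if ip is None:
            raise ValueError(f"{subnet} is too small for {len(nodes)} nodes")
        fqdn = f"{node}.{lab}.{tenant}.{domain}"
        leases.append(MgmtLease(node, mgmt_mac(lab_id, node_id), ip, fqdn))
    return leases


def dns_records(leases: List[MgmtLease], ttl: int = 300) -> List[str]:
    """Render zone-file style A records for a per-lab DNS service."""
    return [f"{lease.fqdn}. {ttl} IN A {lease.ip}" for lease in leases]


if __name__ == "__main__":
    for record in dns_records(allocate(1, "ospf", "acme", "10.10.0.0/24", ["r1", "r2"])):
        print(record)
```

Deriving MACs from IDs keeps the mapping deterministic, so the back-end can rebuild its tables after a restart instead of persisting every lease.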

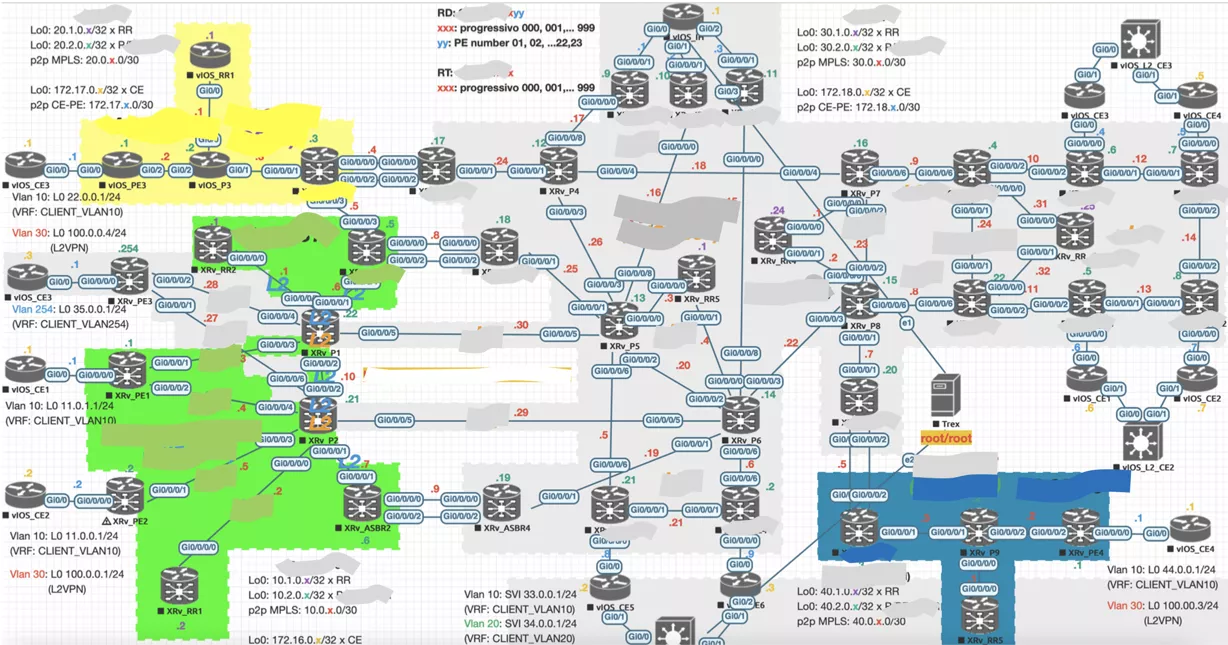

Scale-out and multi-hypervisor

I spent hours figuring out how to scale out and support multiple hypervisors. I want to support VMware vSphere because it’s popular in data centers, and enterprises could reuse the same infrastructure they dedicate to R&D. I want to support QEMU/KVM too because it is more flexible and can emulate a sort of “virtual” L1 link. For example, creating an LACP channel on vSphere is not possible because LACP frames are dropped by default; on Linux, I can patch the kernel to switch any frame (UNetLabv1 includes this kernel patch).
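The LACP example comes down to how the Linux bridge treats IEEE 802.1 link-local group addresses (01:80:C2:00:00:00 to 01:80:C2:00:00:0F). Mainline kernels expose a per-bridge `group_fwd_mask` (in `/sys/class/net/<bridge>/bridge/group_fwd_mask`) to forward some of them but, as far as I know, bits 0 to 2 (STP, MAC PAUSE and LACP) are restricted, which is why a kernel patch is still needed for LACP. The Python sketch below only computes a mask and flags the restricted bits; the helper names are hypothetical and nothing is written to the kernel:

```python
from typing import List

# IEEE 802.1 link-local group addresses: 01:80:C2:00:00:00 .. 01:80:C2:00:00:0F.
# Bit N of a bridge's group_fwd_mask controls forwarding of 01:80:C2:00:00:0N.
LINK_LOCAL_PREFIX = "01:80:c2:00:00:"

# Bits that, as far as I know, stock kernels refuse to set
# (BR_GROUPFWD_RESTRICTED in net/bridge/br_private.h).
RESTRICTED_BITS = {0x00: "STP", 0x01: "MAC PAUSE", 0x02: "LACP (Slow Protocols)"}


def group_fwd_mask(macs: List[str]) -> int:
    """Build a group_fwd_mask value from a list of link-local group MACs."""
    mask = 0
    for mac in macs:
        mac = mac.lower()
        if not mac.startswith(LINK_LOCAL_PREFIX):
            raise ValueError(f"{mac} is not an 802.1 link-local group address")
        suffix = int(mac[len(LINK_LOCAL_PREFIX):], 16)
        if suffix > 0x0F:
            raise ValueError(f"{mac} is outside the 01:80:C2:00:00:0X range")
        mask |= 1 << suffix
    return mask


def blocked_by_kernel(mask: int) -> List[str]:
    """Return the protocols in `mask` that an unpatched bridge will not forward."""
    return [name for bit, name in RESTRICTED_BITS.items() if mask & (1 << bit)]


if __name__ == "__main__":
    lldp_dot1x = group_fwd_mask(["01:80:C2:00:00:0E", "01:80:C2:00:00:03"])
    print(hex(lldp_dot1x), blocked_by_kernel(lldp_dot1x))  # 0x4008 []
    lacp = group_fwd_mask(["01:80:C2:00:00:02"])
    print(hex(lacp), blocked_by_kernel(lacp))  # 0x4 ['LACP (Slow Protocols)']
```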

Extending a lab across different computing nodes raises a question: how do we extend “virtual” L1 links? In UNetLabv2, I approached this problem by implementing a sort of MPLS encapsulation. This approach has some limits:

- it’s slow because switching is done in user space (not in the kernel);

- it’s complex;

- it’s completely proprietary (vSphere will never support that).
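To give an idea of what a label-based encapsulation involves, here is a Python sketch that packs and parses a 4-byte label stack entry using the standard MPLS layout from RFC 3032 (20-bit label, 3-bit traffic class, bottom-of-stack bit, 8-bit TTL). This is not the UNetLabv2 wire format; the idea that one label identifies one virtual L1 link is my assumption, and the function names are hypothetical:

```python
import struct
from typing import Tuple


def encap(label: int, frame: bytes, tc: int = 0, ttl: int = 64) -> bytes:
    """Prepend one MPLS-style label stack entry (RFC 3032 layout) to a frame."""
    if not 0 <= label < 1 << 20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8 or not 0 <= ttl < 256:
        raise ValueError("tc must fit in 3 bits and ttl in 8 bits")
    # label (20 bits) | TC (3 bits) | S (1 bit, set: single-entry stack) | TTL (8 bits)
    entry = (label << 12) | (tc << 9) | (1 << 8) | ttl
    return struct.pack("!I", entry) + frame


def decap(packet: bytes) -> Tuple[int, bytes]:
    """Split an encapsulated packet into (label, original frame)."""
    if len(packet) < 4:
        raise ValueError("packet too short")
    (entry,) = struct.unpack("!I", packet[:4])
    return entry >> 12, packet[4:]


if __name__ == "__main__":
    packet = encap(100, b"ethernet frame")
    print(packet[:4].hex())  # 00064140
    print(decap(packet))     # (100, b'ethernet frame')
```

A user-space switch would call `encap()` when a frame leaves a computing node and `decap()` on the other side, then look up the label to find the destination interface. Doing that for every frame outside the kernel is exactly the performance problem listed above.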

So I chose a different approach:

- the infrastructure is responsible for extending L2 networks (VLANs) using any available technology (VXLAN, Cisco OTV or ACI, VMware NSX…);

- UNetLabv3 computing nodes use 802.1Q trunks to exchange data with each other;

- “virtual” L1 links are allowed only between QEMU/KVM nodes and only within the same computing node;

- trunking L2 links are allowed only within the same computing node;

- nodes on different computing nodes can communicate using specific dedicated VLANs (tagged by the computing nodes).
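The placement rules above can be checked before a lab is started. A hypothetical Python sketch: node placement, the platform names (`qemu`, `vsphere`), the link kinds and the VLAN pool are assumptions, and the 802.1Q helper only shows which 4 bytes a computing node would insert (TPID 0x8100 followed by PCP, DEI and the 12-bit VLAN ID):

```python
import struct
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Node:
    name: str
    host: str      # computing node running this lab node
    platform: str  # hypothetical platform names: "qemu", "vsphere", ...


@dataclass(frozen=True)
class Link:
    a: str
    b: str
    kind: str  # "l1" (virtual wire), "trunk" or "access"


def dot1q_tag(vid: int, pcp: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag: TPID 0x8100, then PCP/DEI/VID (DEI left at 0)."""
    if not 1 <= vid <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7:
        raise ValueError("PCP must be in 0..7")
    return struct.pack("!HH", 0x8100, (pcp << 13) | vid)


def plan_links(nodes: Dict[str, Node], links: List[Link], vlan_pool: range) -> List[str]:
    """Validate each link against the placement rules and describe how it is built."""
    free_vlans = iter(vlan_pool)
    plan = []
    for link in links:
        if link.kind not in ("l1", "trunk", "access"):
            raise ValueError(f"unknown link kind {link.kind!r}")
        a, b = nodes[link.a], nodes[link.b]
        name = f"{link.a}-{link.b}"
        same_host = a.host == b.host
        if link.kind == "l1":
            # Virtual L1 links: QEMU/KVM only, same computing node only.
            if not (same_host and a.platform == b.platform == "qemu"):
                raise ValueError(f"{name}: virtual L1 links need two QEMU/KVM nodes on one computing node")
            plan.append(f"{name}: local virtual wire on {a.host}")
        elif link.kind == "trunk":
            # Trunking L2 links: same computing node only.
            if not same_host:
                raise ValueError(f"{name}: L2 trunks cannot span computing nodes")
            plan.append(f"{name}: local trunk on {a.host}")
        elif same_host:
            plan.append(f"{name}: local bridge on {a.host}")
        else:
            # Cross-node links get a dedicated VLAN carried by the infrastructure.
            vid = next(free_vlans, None)
            if vid is None:
                raise ValueError("VLAN pool exhausted")
            plan.append(f"{name}: VLAN {vid} between {a.host} and {b.host}")
    return plan


if __name__ == "__main__":
    nodes = {
        "r1": Node("r1", "host1", "qemu"),
        "r2": Node("r2", "host1", "qemu"),
        "sw1": Node("sw1", "host2", "vsphere"),
    }
    links = [Link("r1", "r2", "l1"), Link("r2", "sw1", "access")]
    for line in plan_links(nodes, links, range(100, 200)):
        print(line)
    print(dot1q_tag(100).hex())  # 81000064
```

Rejecting invalid links at design time keeps the runtime simple: the computing nodes only need to tag and untag frames, and the DCI technology in between stays invisible to the lab.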

With this approach, users can design labs and optimize them to span multiple computing servers. Moreover, any DCI technology can be used regardless of the vendor or provider: UNetLabv3 simply does not implement DCI, it just uses what the infrastructure provides.

Other considerations

Many of the prerequisites described above require some sort of automation tooling. More specifically, I need the following:

- users can connect to the lab and route packets through it, reaching all nodes (not only for automation labs but also for cyber ranges);

- node consoles, when applicable, must be provided in a terminal-server style (I don’t like HTML5 consoles too much);

- DNS/NTP/DHCP services are provided in each lab (I’d love to automatically assign an FQDN to any running node).

In my mind, each lab would include a special gateway node, responsible for console access and routing. Users would connect to the gateway node using OpenVPN. I have to check whether OpenVPN supports VRFs or whether multiple OpenVPN instances are required to support multi-tenancy. The gateway could use OSPF to learn the lab networks and originate a default route.
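Assuming the second option (one OpenVPN instance per tenant) and FRR as the routing daemon on the gateway, the per-tenant configuration could be generated with something like this Python sketch. The port scheme (1194 + tenant_id), the 10.255.<tenant_id>.0/24 client pool and the function name are hypothetical; certificates, keys and the rest of a real OpenVPN server configuration are left out:

```python
import ipaddress
from typing import Dict


def gateway_config(tenant_id: int, lab_prefix: str, router_id: str,
                   base_port: int = 1194) -> Dict[str, str]:
    """Return hypothetical OpenVPN and FRR snippets for one tenant's gateway."""
    if not 0 <= tenant_id <= 255:
        raise ValueError("tenant_id must fit in one octet of the client pool")
    lab_net = ipaddress.IPv4Network(lab_prefix)
    # Hypothetical VPN client pool: one /24 per tenant inside 10.255.0.0/16.
    vpn_net = ipaddress.IPv4Network(f"10.255.{tenant_id}.0/24")
    openvpn = "\n".join([
        f"port {base_port + tenant_id}",  # one OpenVPN instance (and UDP port) per tenant
        "proto udp",
        "dev tun",
        f"server {vpn_net.network_address} {vpn_net.netmask}",
        # Send traffic for the whole lab range through the tunnel.
        f'push "route {lab_net.network_address} {lab_net.netmask}"',
    ])
    frr = "\n".join([
        "router ospf",
        f" ospf router-id {router_id}",
        f" network {lab_net} area 0",             # peer with lab routers, learn lab networks
        " default-information originate always",  # advertise 0.0.0.0/0 into the lab
    ])
    return {"openvpn": openvpn, "frr": frr}


if __name__ == "__main__":
    cfg = gateway_config(3, "10.3.0.0/16", "10.3.0.1")
    print(cfg["openvpn"])
    print(cfg["frr"])
```

Because the gateway originates the default route, lab nodes can reach the VPN clients without the client pool being advertised into OSPF.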

Conclusions

Developing UNetLab was one of the best hobbies I ever had. I met a lot of interesting people, many of whom I’m still in touch with. Moreover, it taught me how critical it is to maintain software used all around the world without disappointing users because of a bug. Even so, sometimes I dream about developing UNetLab again.

But, as you know, I’m just speculating…

If you have additional prerequisites I didn’t consider, drop me an email or write to me on LinkedIn.