April 29, 2024

Unified Networking Lab (UNetLab): the story

I have been developing network emulators since 2011 and, even though I’m not a programmer, I can say I did a good job: without any budget, I made iou-web (at first) and UNetLab (later) good competitors to GNS3 and VIRL.

If you’re looking for EVE-NG, please follow the link.

Below you can read a summary of my software and the architecture of UNetLabv2.

UNetLabv2 has the same UNetLab features, plus:

- thousands of nodes for each lab;

- labs distributed between dozens of physical or virtual nodes;

- unlimited running labs for each user;

- support for Ansible/NAPALM/… automation tools

Previously on WebIOL/iou-web

At the end of 2011, I needed a tool to emulate networks. Prerequisites were:

- portability: labs must be exportable and importable;

- stability: running labs must be stable, avoiding crashes;

- performance: labs must be able to run on a cheap laptop or in a VM.

GNS3 was the only available option, and it couldn’t satisfy all the prerequisites. I decided to develop a new network emulator based on IOL (IOS on Linux, by Cisco). I knew many Cisco guys, and I would have loved to be part of the Cisco family, sooner or later. In the meantime, I found out that Cisco had internally developed similar software, called WebIOL.

WebIOL was developed in Perl and released to Cisco employees only. It contained some basic labs from the Cisco 360 program.

After a couple of alpha versions, iou-web was released to the public on January 23rd, 2012. iou-web (not webiou or web-iou) was developed in PHP and within a few months counted hundreds of users worldwide. While GNS3 supported real IOS images only (via Dynamips), iou-web supported IOL only. But because IOL was faster than Dynamips, many users preferred iou-web.

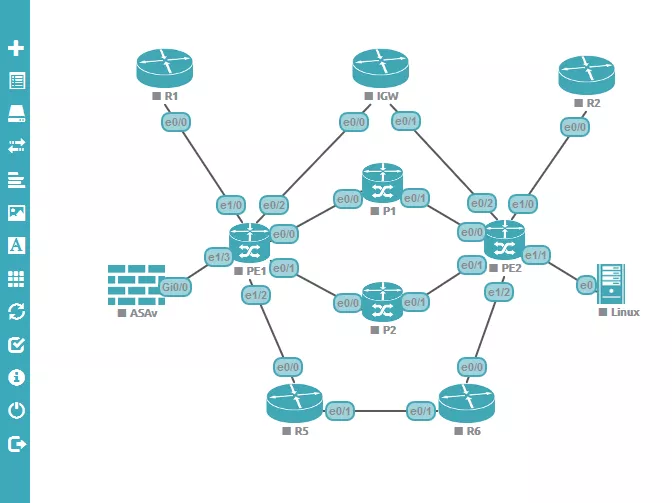

UNL/UNetLab/EVE-NG

In 2013 I realized iou-web was too limited: I needed a “unified” way to emulate a network, including firewalls, load balancers, and so on. Moreover, multicast on IOL was buggy. I had more prerequisites:

- include vendor virtual appliances in labs;

- include real IOS in labs;

- include (possibly) emulators from different vendors;

- multi-user.

I rewrote iou-web entirely to make an extensible network emulator system. The idea was:

- each node (emulated device) must be attached to a common layer;

- a nice common layer could be an Ethernet Linux bridge (OVS was supported too).

UNetLab was released to the public on October 6th, 2014. It was developed in PHP using a REST API framework, with a single page application (jQuery) as the UI. Within a few months, I was able to count five to six hundred daily users. I would have named it UNL, but the website name wasn’t available.

Initially, I had the idea to include Huawei eNSP, but because eNSP nodes expect a particular string, no one was able to run eNSP nodes outside eNSP.

In 2015 I didn’t have enough spare time for UNetLab, so a group of guys forked it, and on January 5th, 2017 EVE-NG was released to the public. But that’s another story, because I’m not part of the EVE-NG team.

In 2014 I had an interesting chat with a guy from the Cisco VIRL team during CLEUR 2014 (Milan).

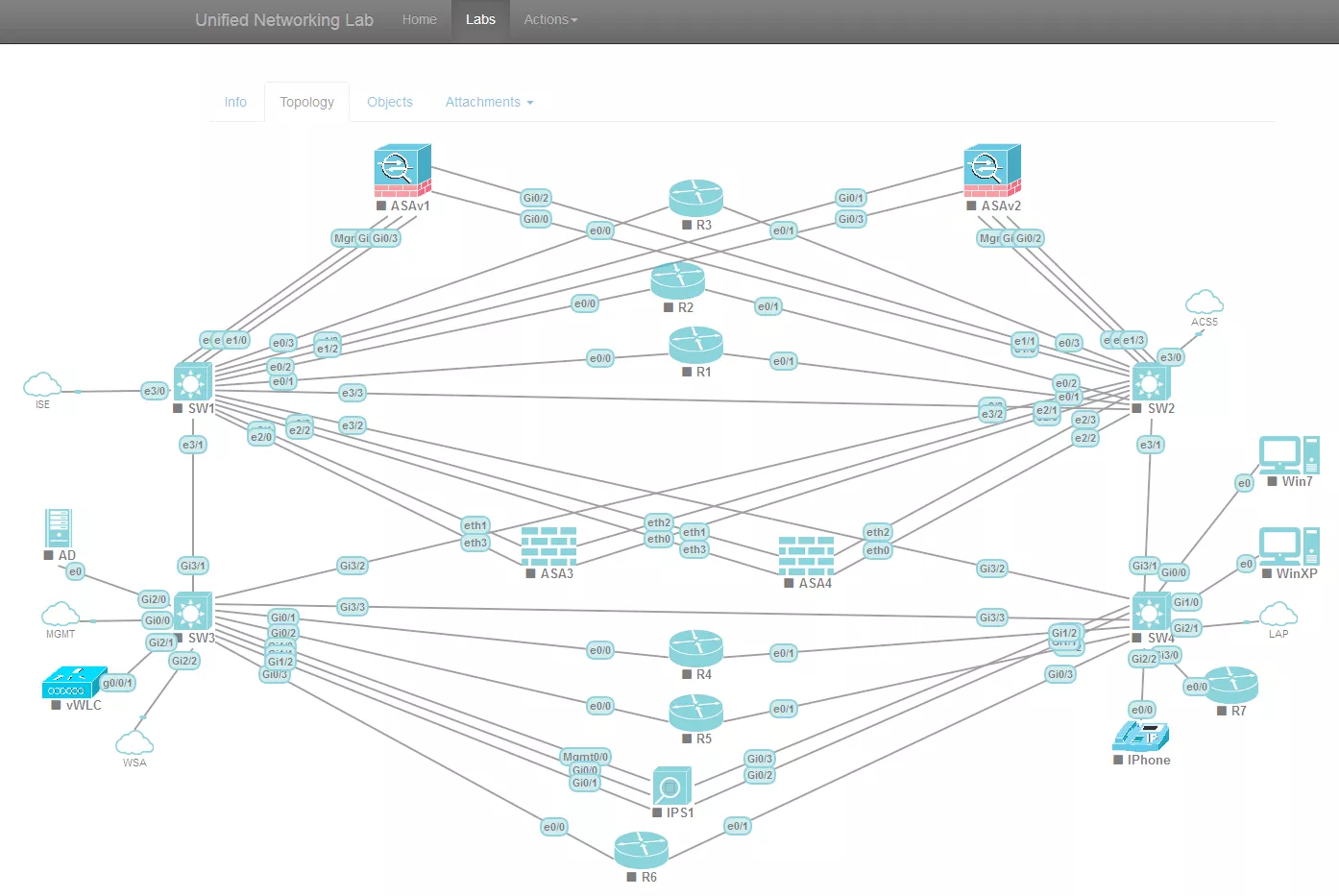

Why UNetLabv2

Over the last two years, my focus has moved to network automation. I still need a unified network emulator platform, but the prerequisites have changed. Before getting to that, let me point out UNetLab’s limits:

- per host pod limit: currently each host can run up to 256 independent pods, because of the console port limit;

- per lab node limit: currently each pod/lab can run up to 128 - 1 nodes, because of the console port limit;

- per user pod limit: currently each user can run only one pod/lab at a time, because of the console port limit;

- one host only: currently there is no way to make a distributed installation of UNetLab (OVS could be used, but many frame types are filtered by default);

- config management: getting and putting the startup-config is done through expect scripts, which are slow, unpredictable, and cannot cover all node types;

- Dynamips serial interfaces are not supported;

- no topology change is allowed while a lab is running, by design.

The console port limit exists because each node console has a fixed port, calculated as follows: ts_port = 32768 + 128 * tenant_id + device_id. Moreover, no more than 512 IOL nodes can run inside the same lab because device_id must be unique for each tenant.
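To make the arithmetic concrete, here is a minimal Python sketch of the formula above. The constant names and the range checks are my own illustration, not UNetLab code; in particular, the assumption that device_id runs from 1 to 127 is inferred from the "128 - 1 nodes" limit listed earlier.

```python
BASE_PORT = 32768        # first console port, from the formula above
PORTS_PER_TENANT = 128   # size of each tenant's port block
MAX_PORT = 65535         # highest valid TCP port


def ts_port(tenant_id: int, device_id: int) -> int:
    """Return the fixed console port for a node (illustrative helper)."""
    # Assumption: device_id 0 is unused, leaving 127 nodes per pod/lab.
    if not 1 <= device_id < PORTS_PER_TENANT:
        raise ValueError("device_id must be between 1 and 127")
    if tenant_id < 0:
        raise ValueError("tenant_id must not be negative")
    port = BASE_PORT + PORTS_PER_TENANT * tenant_id + device_id
    if port > MAX_PORT:
        raise ValueError("console port exceeds the TCP port range")
    return port


# Number of 128-port blocks that fit between 32768 and 65535.
max_tenants = (MAX_PORT + 1 - BASE_PORT) // PORTS_PER_TENANT

print(max_tenants)        # 256
print(ts_port(0, 1))      # 32769
print(ts_port(255, 127))  # 65535
```

The last node of the last tenant lands exactly on port 65535, which is where the 256 pods per host and 127 nodes per lab come from.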

So UNetLab v2 must be able to:

- run a distributed lab (between local or geographically distributed nodes);

- run labs with an unlimited number of nodes;

- allow each user to customize a lab without affecting the original copy;

- link serial interfaces between IOL and Dynamips;

- configure nodes via Ansible/NAPALM/whatever.

I knew how to make a distributed network emulator, but one piece was missing: how to easily run nodes within a dedicated namespace. I got the answer from vrnetlab: use Docker.

Because of UNetLab’s limits, I preferred to rewrite UNetLab from scratch, again. Although EVE-NG is a UNetLab fork, the EVE-NG team is working to overcome the limits described above.

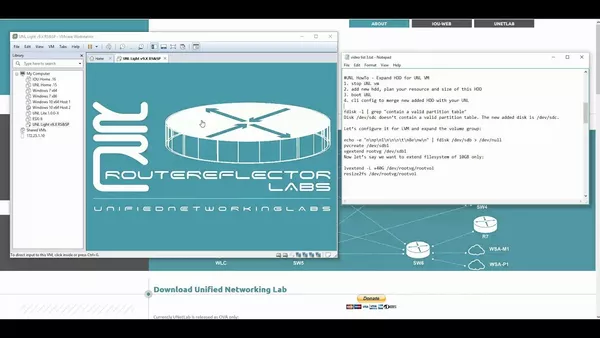

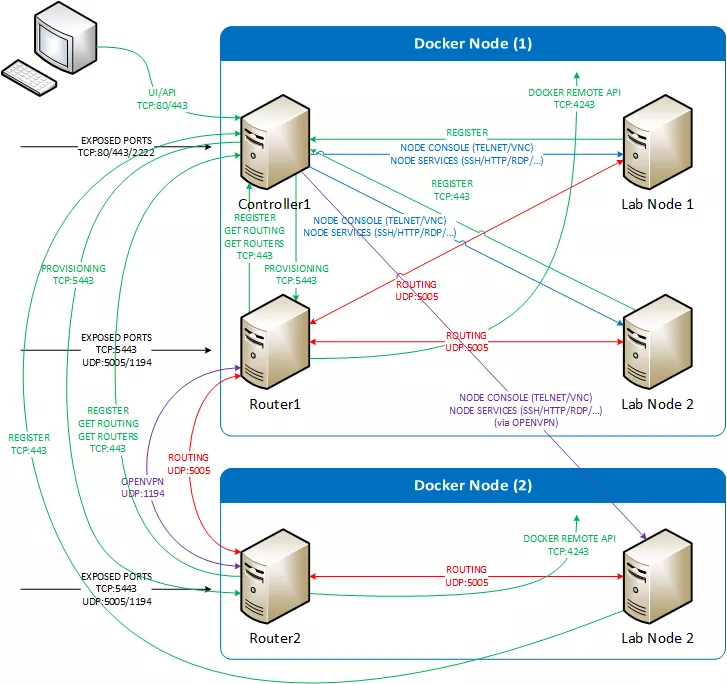

UNetLabv2 Architecture

The architecture may look a little complex, but it isn’t: in fact, I was able to implement it by myself in a relatively short time.

UNetLabv2 is based on:

- Docker: controller, routers, and lab nodes run inside Docker containers;

- Python: no more C, PHP, or Bash, only Python 3;

- Python-Flask + NGINX implement and expose APIs;

- Memcached caches authentication for a better user experience;

- Celery + Redis manage long asynchronous tasks in the background;

- MariaDB stores all data (users and running labs);

- Git stores original labs with version control;

- jQuery + Bootstrap will implement the UI as a single page app;

- iptables + Linux bridge allow connecting to freshly started lab nodes via SSH;

- IOL, QEMU, and Dynamips run lab nodes.

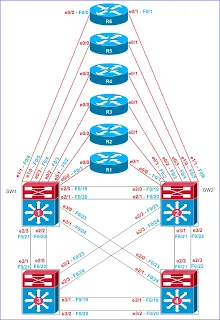

A UNetLabv2 cluster must have at least one node, which can be a physical or a virtual system. Each UNetLabv2 node runs Docker: the first UNetLabv2 node is the master and contains a controller and a router, while every additional UNetLabv2 node contains a router only. Each UNetLabv2 node also runs many lab nodes. Controller, routers, and lab nodes are Docker instances.
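The placement rule above can be sketched in a few lines of Python. This is only an illustration of the rule as described; the class, the function, and the host names are hypothetical and not taken from the UNetLabv2 code.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    """A physical or virtual UNetLabv2 node (hypothetical model)."""
    name: str
    containers: list = field(default_factory=list)


def build_cluster(host_names):
    """Assign infrastructure containers: the first host is the master."""
    if not host_names:
        raise ValueError("a cluster needs at least one node")
    cluster = []
    for index, name in enumerate(host_names):
        # The master runs controller + router, every other node a router only.
        roles = ["controller", "router"] if index == 0 else ["router"]
        cluster.append(ClusterNode(name=name, containers=roles))
    return cluster


cluster = build_cluster(["host-a", "host-b", "host-c"])
for node in cluster:
    print(node.name, node.containers)
```

Lab node containers are left out on purpose: they are started on demand on any node, next to these infrastructure containers.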

The controller container:

- exposes web UI and APIs to user clients, routers, and lab nodes;

- receives registration requests from routers and lab nodes;

- provisions and manages lab nodes via routers;

- contacts lab nodes with Ansible/NAPALM/… through routers;

- provides routing table to routers;

- can be reached via SSH using port 2222.

The router containers:

- register against the controller;

- expose Docker Remote API;

- expose lab node API;

- get routers and routing table from the controller;

- route lab packets between nodes and routers;

- allow reachability between controller and remote lab nodes via OpenVPN.

The node containers:

- register against the controller;

- run the emulated node (IOL, Dynamips, or QEMU);

- bind a management interface of emulated nodes to a local bridge;

- route and sNAT/dNAT packets to the management interface of the emulated node;

- route packets between the local router and the emulated node for the non-management interfaces;

- manage emulated links (up/down).

Because the controller and the routers know both inside and outside IP addresses, users can deploy UNetLabv2 nodes wherever they want (in the same LAN, in AWS, Google Compute Engine, Azure, behind a firewall…). As the above diagram explains, each UNetLabv2 cluster needs the following ports:

- TCP:2222 to connect to the controller via SSH;

- TCP:80/443 for HTTP/HTTPS requests to the controller;

- TCP:5443 for HTTPS requests to the router;

- UDP:5005 for routed packets between different UNetLabv2 nodes;

- UDP:1194 for network reachability between the controller and remote lab nodes.
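As a rough aid for writing firewall rules, the list above can be kept as data and filtered by the containers a given node runs. This is a sketch, not UNetLabv2 code: the names are hypothetical, and attaching UDP 5005 and UDP 1194 to the router is my reading of the router duties described earlier, not something the list states.

```python
from typing import NamedTuple


class PortRule(NamedTuple):
    protocol: str
    port: int
    target: str   # container assumed to listen on the port
    purpose: str


REQUIRED_PORTS = [
    PortRule("tcp", 2222, "controller", "SSH to the controller"),
    PortRule("tcp", 80, "controller", "HTTP requests to the controller"),
    PortRule("tcp", 443, "controller", "HTTPS requests to the controller"),
    PortRule("tcp", 5443, "router", "HTTPS requests to the router"),
    # Assumption: routed lab packets and the VPN terminate on the router.
    PortRule("udp", 5005, "router", "routed packets between UNetLabv2 nodes"),
    PortRule("udp", 1194, "router", "controller to remote lab nodes"),
]


def ports_for(containers):
    """Return sorted (protocol, port) pairs needed by the given containers."""
    return sorted(
        {(r.protocol, r.port) for r in REQUIRED_PORTS if r.target in containers}
    )


print(ports_for(["router"]))
# [('tcp', 5443), ('udp', 1194), ('udp', 5005)]
print(ports_for(["controller", "router"]))
```

Under these assumptions, a router-only node would need three ports open, while the master node would need all six.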

UNetLabv2 is discontinued and thus not available to the public. Don’t ask for it and go with GNS3, VIRL or EVE-NG.