May 05, 2024

Designing a cloud provider with both VMware NSX and Cisco ACI

A few months ago I had a chat with a customer about IaaS. The customer wanted to change its business model from an internal-only ICT department to an IaaS-style provider serving both internal and external users.

The prerequisites from the customer were:

- infrastructure distributed into two data centers, 30km between them;

- ability to move workloads transparently between the two data centers;

- users should be able to do everything via a self-service portal.

Of course, the above prerequisites are not enough. Customers often don’t know what they want. After an additional chat, we agreed on:

- High Availability Zones: each data center must be designed to be completely independent. Data centers are interconnected with redundant 100GbE.

- Mobility: workloads can be transparently moved from one data center to the other. Tenants usually run in one data center only, except while moving. The extra latency during a move is accepted because the move is considered a temporary, planned event. The idea is that users get space in one data center, but they can be moved to the other one. Users cannot buy space in both data centers to deliver distributed applications.

- Physical devices: physical devices can be added to specific tenants. Examples are ISP routers, Oracle Exadata, IBM AS/400, HSMs…

- Connectivity: each tenant can be reached from outside via remote access VPN and site-to-site VPN. Other external connectivity, like MPLS/VPLS/…, can also be used. Each tenant can rent some public IP addresses, or just use the assigned ones. Tenants can be interconnected if they have non-overlapping IP addresses.

- Storage: fiber-channel storage is not stretched between data centers.

- Self Service Portal: users won’t be able to do “everything” but only a set of defined actions.

- Backup and Disaster Recovery: users can buy backup (BaaS) and/or disaster recovery (DRaaS) features on a per VM basis.
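The connectivity requirement above (tenants can be interconnected only if their addresses do not overlap) is easy to check before provisioning. The following is a minimal sketch using Python's standard `ipaddress` module; the tenant names and prefixes are made up for illustration and are not part of the customer's design.

```python
from ipaddress import ip_network
from itertools import combinations


def overlapping_tenants(tenant_prefixes):
    """Return the pairs of tenants that have at least one overlapping prefix."""
    conflicts = []
    # Compare every pair of tenants exactly once, in a stable order.
    for (name_a, nets_a), (name_b, nets_b) in combinations(
        sorted(tenant_prefixes.items()), 2
    ):
        if any(
            ip_network(a).overlaps(ip_network(b)) for a in nets_a for b in nets_b
        ):
            conflicts.append((name_a, name_b))
    return conflicts


# Hypothetical tenants: tenant-c reuses part of tenant-a's address space.
tenants = {
    "tenant-a": ["10.0.0.0/24"],
    "tenant-b": ["10.0.1.0/24"],
    "tenant-c": ["10.0.0.128/25"],
}

conflicts = overlapping_tenants(tenants)
print(conflicts)
```

In this example only tenant-a and tenant-b could be interconnected with each other without address translation.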

The very High-Level Design (HLD)

The choice of technology should not be discussed at this level, but because every technology has its own prerequisites/features/limitations/peculiarities, we cannot go far without making assumptions.

The “mobility” prerequisite is one of the most stringent: moving workloads without disruption (vMotion) means that LANs must be stretched. To stretch LANs, one of the following can be used:

- standard VXLAN

- Cisco ACI

- VMware NSX

This post focuses on VMware NSX and Cisco ACI, so standard VXLAN will not be discussed. But the step from standard VXLAN to Cisco ACI or VMware NSX is short.

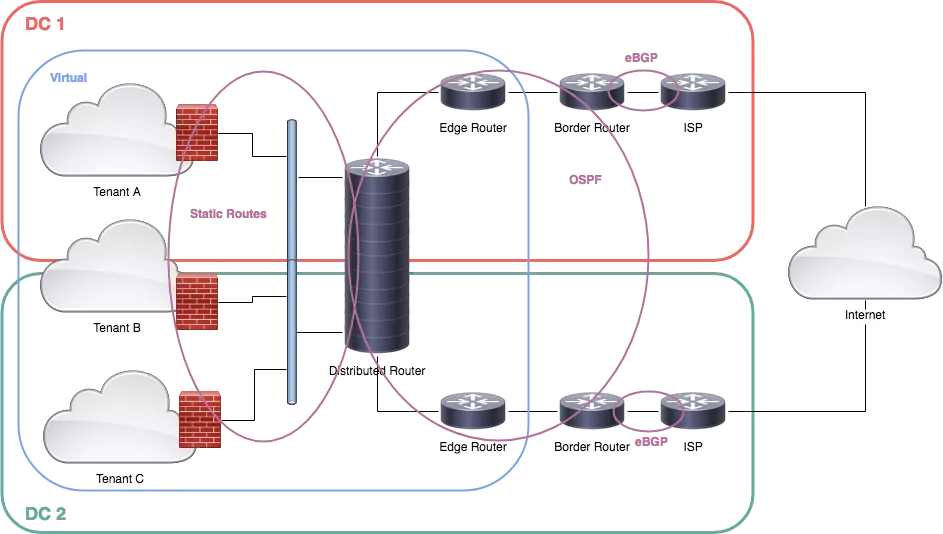

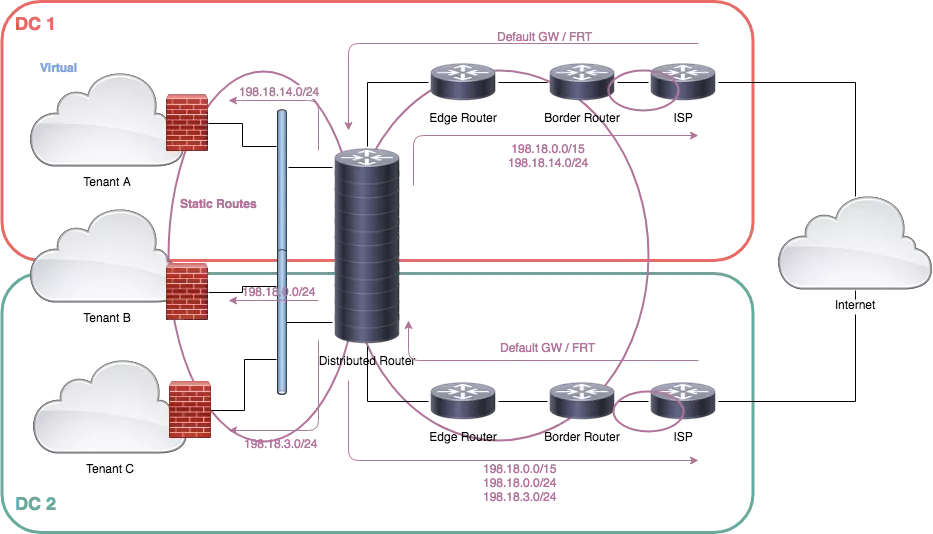

Let’s start with the big picture:

Each data center is connected to the Internet through one or more ISPs, announcing the same prefixes via BGP from the border routers. The border routers are connected to the inside routers and then to a distributed router.

Each tenant is isolated by a firewall, connected to the distributed router.

The diagram is inspired by VMware NSX, so let’s start by discussing it in detail.

VMware NSX

Let’s look at the diagram again:

Each border router is connected to one or more ISP routers. Both border routers announce the same prefixes with the same AS. In other words, from the Internet, both data centers are seen as a single data center with two independent exits.

Border routers also announce users’ prefixes directly. Because of NSX limitations (discussed below), BGP peering between tenants and border routers is not permitted.

Both border routers are also running OSPF, each one peering with an edge router. An edge router (in the NSX world) is a clustered VM acting as a router with some additional features like NAT, firewall, load balancer, IPSec concentrator…

In this design, both edge routers are just routers, nothing more.

Both edge routers peer (OSPF) with a distributed logical router (DLR). A DLR is a router stretched across one or more physical servers running ESXi. On the other side, the DLR is connected to a stretched network.

Each tenant has its own gateway, implemented by a non-VMware firewall.

Let’s now discuss some VMware NSX limits and the design choices above. Mind that these limits may have changed in the meantime, so feel free to add your opinion by commenting on this post.

- The edge routers cannot be automatically moved (vMotion) between data centers: in other words, a workload cannot be moved from DC1 to DC2 without manual intervention. It’s still possible to destroy a cluster of edge routers and recreate it in the other DC using the old configuration, but this is obviously a disruptive approach. Using non-VMware clustered firewalls allows moving one node at a time. Edge firewalls can also offer remote access VPNs, site-to-site IPSec VPNs… Because a firewall can be moved to the other data center without changing any IP address, the “outside” network must be stretched between data centers.

- The DLR cannot run OSPF facing the firewalls, so static routing is the only option. Each firewall uses the DLR as its default gateway, and the DLR forwards packets destined to the public IP addresses assigned to each tenant. Also, the DLR cannot peer with the border routers directly. That’s why edge routers are used between the DLR and the border routers.

- Because the smallest network that can be announced to the Internet is a /24, we can assume that several tenants will share the same /24 network. In this case, we should try to run all those tenants in the same DC. The nearest border router will announce a more specific network, so all traffic will enter from the right link.
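The last point relies on longest-prefix matching: when an aggregate and a more specific prefix are both known, traffic follows the more specific one. The sketch below illustrates one possible reading of that point, assuming a provider aggregate reachable through either data center and one /24 per tenant group announced from the data center hosting it. The prefixes and next-hop labels are placeholders, not values from the real design.

```python
from ipaddress import ip_address, ip_network


def best_route(routes, destination):
    """Return the next hop of the longest matching prefix, or None."""
    dest = ip_address(destination)
    matches = [
        (ip_network(prefix), next_hop)
        for prefix, next_hop in routes
        if dest in ip_network(prefix)
    ]
    if not matches:
        return None
    # The longest prefix (highest prefix length) wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Hypothetical view from the Internet: the aggregate is reachable via
# either data center, each /24 only via the data center hosting its tenants.
routes = [
    ("198.18.0.0/22", "any-dc"),
    ("198.18.1.0/24", "dc1"),
    ("198.18.2.0/24", "dc2"),
]

print(best_route(routes, "198.18.1.10"))
print(best_route(routes, "198.18.2.10"))
print(best_route(routes, "198.18.3.10"))
```

Addresses covered only by the aggregate can enter from either data center, which is why tenants sharing a /24 should live in the same one.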

Distributed firewall with VMware NSX

VMware NSX provides a distributed firewall to implement micro-segmentation. It sounds great, but there is only one distributed firewall policy, shared by all tenants. In a multi-tenant environment, having a single policy is not a great idea, because every user has specific needs, and the policy cannot grow forever.

The distributed firewall wasn’t included in the feature list, but I think it is still a nice-to-have feature. How can we adapt a single-policy firewall to a multi-tenant environment?

We could use tags. Each VM can be tagged and get specific firewall rules.

Assume, for example, the following tags:

- server_HTTP

- server_HTTP_Proxy

- client_HTTP_Proxy

- allow_any

We can write a policy like the following:

- VMs tagged with allow_any will receive any traffic.

- VMs tagged with server_HTTP will receive HTTP connections.

- VMs tagged with server_HTTP_Proxy will receive connections from client_HTTP_Proxy VMs only.

In this scenario, which applies to the distributed firewall only and not to the edge firewall, each user knows what the policies are and decides, by tagging VMs, whether a VM is protected by the firewall or bypasses it.
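The tag-based policy can be modeled in a few lines to reason about it before touching the real firewall. This is only a sketch of the logic, not NSX configuration: the `VM` class and the `is_allowed` function are hypothetical, and two details are assumptions that the policy above does not state (“HTTP” is taken to mean TCP port 80, and anything not explicitly allowed is denied).

```python
from dataclasses import dataclass, field

HTTP_PORT = 80  # assumption: "HTTP connections" means TCP/80


@dataclass(frozen=True)
class VM:
    name: str
    tags: frozenset = field(default_factory=frozenset)


def is_allowed(src, dst, dst_port):
    """Evaluate the single shared policy for traffic from src to dst."""
    if "allow_any" in dst.tags:
        return True  # the VM owner opted out of filtering
    if "server_HTTP" in dst.tags and dst_port == HTTP_PORT:
        return True
    if "server_HTTP_Proxy" in dst.tags and "client_HTTP_Proxy" in src.tags:
        return True  # proxies accept tagged clients only
    return False  # assumption: default deny


web = VM("web", frozenset({"server_HTTP"}))
proxy = VM("proxy", frozenset({"server_HTTP_Proxy"}))
client = VM("client", frozenset({"client_HTTP_Proxy"}))
untagged = VM("untagged")

print(is_allowed(untagged, web, 80))
print(is_allowed(untagged, proxy, 3128))
print(is_allowed(client, proxy, 3128))
```

The policy itself never mentions a tenant: the rule set stays the same size no matter how many tenants exist, and only the tags on each VM change.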

Availability zones with VMware NSX

Because one of the prerequisites is the independence of data centers, we must design the infrastructure so that it survives even if an entire data center is lost.

Remember that users will be able to run VMs in only one data center at a time. Enabling users to run VMs in both data centers is not so difficult, but that’s not part of the prerequisites.

In the VMware NSX world we have:

- NSX Manager: it’s the management plane for the network. Each NSX Manager is bound to a single vCenter.

- NSX Controllers: they are the control plane for the network. Three controllers must exist, and two must be alive. If one or no controllers are alive, all VMs “should” run fine as long as nothing changes (i.e. no vMotion, no power on…).

- vCenter

- PSC

- Active Directory

- vCloud Director / vRealize Automation

- …

Because we need two independent data centers, we need one NSX Manager and three NSX Controllers per data center. In this configuration, we must be sure we can move (vMotion) VMs between data centers. In other words, we must be able to move VMs between:

- vCenters (that’s fine)

- vCloud Director / vRealize Automation (probably we cannot do that, the best option we have is a disruptive export and import).

Moreover, we have to define virtual networks as universal. In previous vCloud Director and vRealize Automation versions, users could not create a universal virtual network: it had to be created manually by an administrator.

If we relax the prerequisite, we can have a single stretched infrastructure with one NSX Manager, three NSX Controllers, and one vCenter. In this case, VMs can be moved without issues, but we must consider how to recover when a data center loss takes down the infrastructure VMs that exist only once (NSX Manager, NSX Controllers, vCenter…).

To be more specific, one data center will run two NSX Controllers, and the other one will run just one. If the first data center is lost, the NSX Controller cluster loses quorum. At that point, one NSX Controller must be manually redeployed in the surviving data center.
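The quorum arithmetic behind this paragraph is simple enough to sketch. The snippet below assumes a plain majority rule, which matches the “three must exist, two must be alive” statement above; the function names and the `placement` dictionary are illustrative only.

```python
def has_quorum(alive, total=3):
    """A cluster keeps quorum while a strict majority of nodes is alive."""
    return alive > total // 2


def quorum_after_losing(placement, lost_dc):
    """Check quorum after every controller in lost_dc disappears."""
    total = sum(placement.values())
    alive = total - placement[lost_dc]
    return has_quorum(alive, total)


# Stretched design from the text: two controllers in one DC, one in the other.
placement = {"dc1": 2, "dc2": 1}

print(quorum_after_losing(placement, "dc1"))  # majority side lost
print(quorum_after_losing(placement, "dc2"))  # minority side lost
```

With three controllers there is no placement across two sites that survives the loss of either site, which is why the manual redeployment step is unavoidable in the stretched design.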

Backup and Disaster Recovery

Backing up VMs and mirroring them to the remote data center is not too difficult. The hardest part is restoring a backup or activating the VM in the remote data center.

As I mentioned before, VMs can be imported into vCloud Director / vRealize Automation, but deep tests must be run to check that everything works. I’m referring especially to tags, networks, service portal accounting…

Onboarding

The onboarding service is omitted from many designs. As an architect, I can say that this is the most critical part for many users: how do you migrate a physical, legacy data center to a cloud provider with minimal disruption? I should also mention that many enterprises don’t know what is running in their data centers, and often they don’t have the skills to plan a move. This can be a business opportunity and an enabler for the IaaS service.

For VMware there is a lot of good software, such as Veeam and Zerto. Each product has its own cost, features, and compatibility list.

Physical devices attached to VMware NSX

Last but not least, VMware NSX allows extending L2 networks to a physical domain. High availability and convergence timers must be tested to be sure they are compatible with the SLA.

Even if this works fine, it seems to me that these extensions are not easy to maintain if their number grows a lot. Moreover, they probably must be configured manually because they are not part of the default vCloud Director / vRealize Automation scripts.

Conclusion for the VMware design

I hope you now understand why this design is closely tied to the technology choice. While running the infrastructure can be more or less vendor-independent, once we add the service portal, backup, disaster recovery, and onboarding… we must evaluate many software products and check if and how they can work together.

Also, deep customization can be an issue: if we develop scripts for every single action we want to offer to users, how can we ensure that all scripts will still work after a release upgrade?

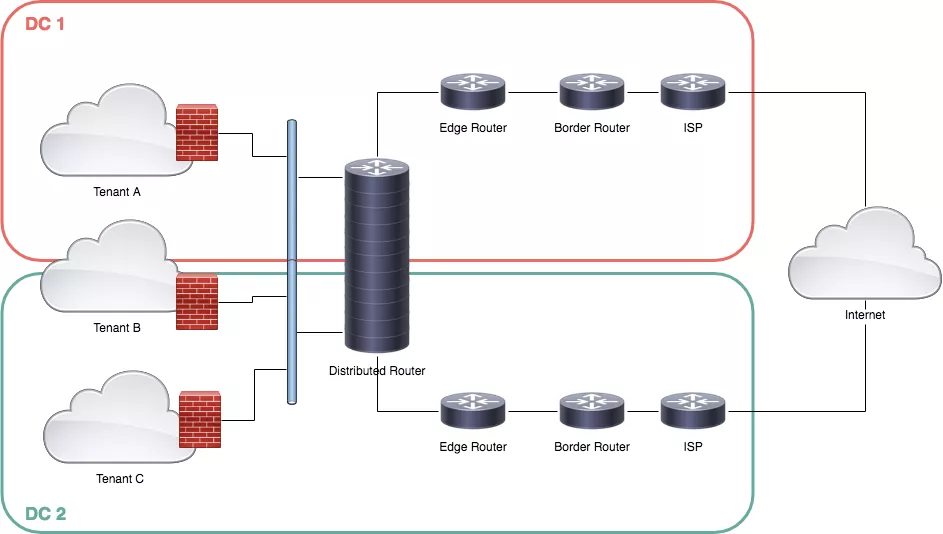

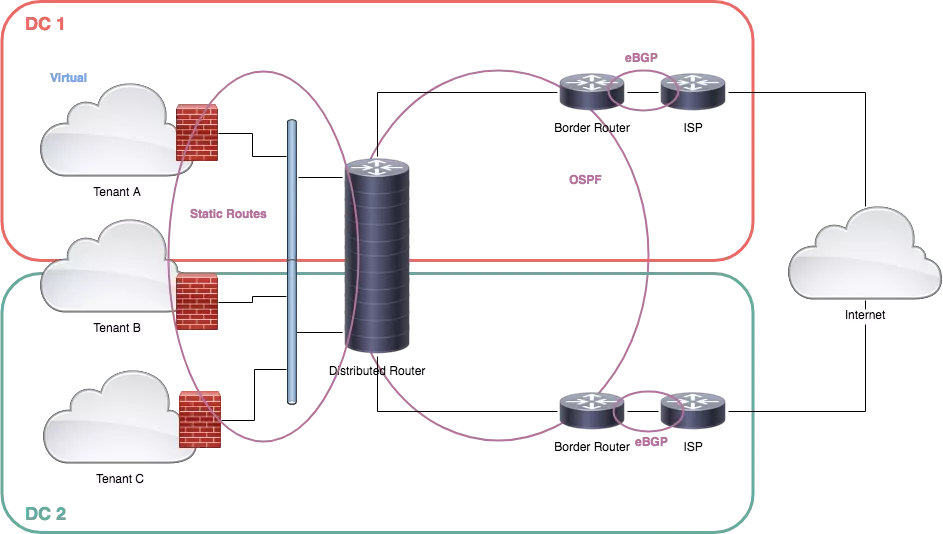

Cisco ACI

Cisco ACI completely splits the fabric configuration from the tenant configuration. Once the fabric has been deployed, each tenant gets its own contracts, policies, and so on.

While VMware NSX includes an edge gateway (not used in this design, however), Cisco ACI has no way to NAT addresses.

Because of the VMware NSX (see above) and Cisco ACI limitations, the design is pretty similar:

With Cisco ACI, border routers can now also peer with tenants, so users can announce their prefixes by themselves.

The edge routers are not needed anymore because ACI can peer with external routers directly. With this design, customers can announce their prefixes directly, but the border routers must be configured as a transit AS for traffic sourced from or destined to the users.

Because Cisco ACI cannot NAT IP addresses, a gateway for each tenant is needed. Virtual or physical firewalls can be used, as we discussed before in the VMware NSX paragraph.

While the infrastructure is relatively easy to design, the most critical part is once again the software: what should we use to run VMs, implement a service portal…

Once again VMware’s suite is a good choice, but we can also use OpenStack, OpenNebula, CloudStack…

While a 100% VMware suite can work mostly out of the box, we probably won’t find a CloudStack + Cisco ACI service portal ready to be used.

Distributed firewall with Cisco ACI

While the VMware NSX distributed firewall has a single policy, with Cisco ACI each tenant gets its own separate rules.

Availability zones with Cisco ACI

To fully satisfy the prerequisites, a multi-site fabric must be deployed, with three APIC controllers per site.

On top of that, a Cisco ACI Multi-Site policy manager can be deployed to manage policies in both data centers from a single location. Of course, the policy manager must be recovered if the site hosting it goes completely offline.

If we relax the prerequisite, we can deploy a multi-pod fabric. Because each fabric must have at least two running controllers (I’m not discussing here how a five-controller fabric works), once again one data center will get one controller only.

But with Cisco ACI, the controller is the management plane. The fabric can work fine even without controllers. And we can add a fourth APIC with the standby role (I guess turning it from standby to active is still a manual procedure).

If only one controller exists, the management can be read-only.
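The practical difference from a control-plane cluster is that losing controllers degrades management, not forwarding. The sketch below encodes that behavior as described in this section; it is a deliberate simplification (a majority means read-write, a minority means read-only), and `fabric_state` is a hypothetical helper, not an APIC API.

```python
def fabric_state(alive_apics, total_apics=3):
    """Summarize a fabric's state for a given number of live controllers."""
    if alive_apics > total_apics // 2:
        management = "read-write"
    elif alive_apics >= 1:
        management = "read-only"  # simplification of "can be read-only"
    else:
        management = "unavailable"
    # Per the text, the fabric keeps forwarding even with no controllers.
    return {"forwarding": True, "management": management}


# Multi-pod layout from the text: two APICs in one DC, one in the other.
for alive in (3, 2, 1, 0):
    print(alive, fabric_state(alive))
```

Promoting a standby APIC in the surviving data center would bring the count of live controllers back to two in this model.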

Backup, Disaster Recovery, and Onboarding

We already discussed these topics in the VMware part. With Cisco ACI, more or less all the above considerations are still valid, but because Cisco does not provide a full suite, more development is probably required. That’s not necessarily a bad thing.

Physical devices attached to Cisco ACI

While interconnecting physical devices to a VMware NSX environment is an “exception” to the virtual world, with Cisco ACI physical devices are entirely part of the game. In other words, the number of physical devices can increase a lot without any issue.

Conclusion for the Cisco ACI design

While VMware offers a more complete suite to cover this design case, Cisco ACI offers a more flexible infrastructure without any additional software.

Both solutions have strong limits, and both require a lot of integration development.

Conclusions

I wrote this post to share ideas and experience gathered over the last few years. It cannot be complete, and it is probably already outdated. Anyway, it should give you some pointers on where to start.

Today there are no out-of-the-box solutions for this case, so some prerequisites must be relaxed. My opinion is that in a 100% virtual world, VMware is maybe the best solution nowadays. But with a significant share of physical servers or devices, Cisco ACI is more flexible.